In the previous post, we explored what it means to be creative as a scientist, particularly within the life sciences, and defined it as the ability to see new patterns, frame old problems in new ways, and design systems and frameworks that did not previously exist. Despite its importance, scientific creativity is often stifled by mental overload from repetitive, mundane tasks, a systemic deprioritization of exploratory work, and technical barriers that impede fluid collaboration. These constraints not only slow progress and drive up costs, but also lead to frustration among highly skilled scientists whose creative potential remains underutilized.

A powerful and increasingly accessible remedy lies in AI-driven automation. AI can take over routine, time-consuming tasks like data cleaning, annotation, and formatting, while also acting as a creative partner that helps researchers generate ideas, explore alternatives, and simulate outcomes. But embracing this opportunity is not simply a matter of adding a new tool. It requires a shift in mindset: a willingness to bring AI into every stage of the scientific creative process.

Unlike earlier technological upgrades, such as using the internet alongside libraries for literature search, integrating AI into scientific workflows requires a systemic overhaul. Legacy systems that were merely adequate must give way to methods optimized for speed, scale, and insight. Accepting AI as both executor and collaborator calls for scientists to rethink not just how they work, but how they think.

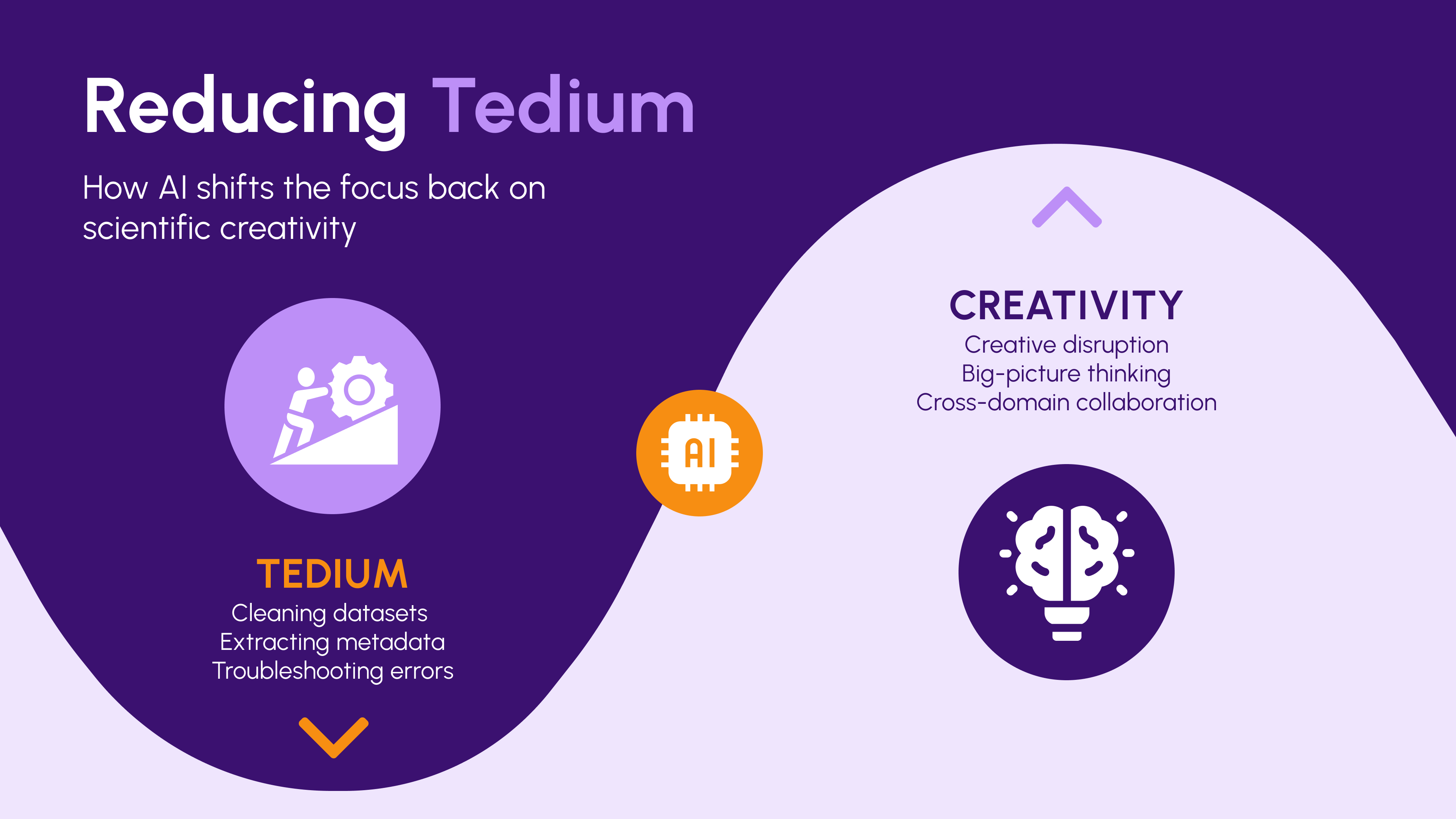

Much of the day-to-day work in scientific research is not driven by insight or imagination, but by necessity. Scientists across the life sciences routinely spend hours on tasks like cleaning large-scale omics datasets, standardizing clinical records, annotating images, formatting spreadsheets, extracting metadata, or troubleshooting instrument output. These tasks, while essential, are rarely intellectually stimulating. They demand precision, repetition, and time, often at the cost of energy that could otherwise go toward formulating questions, interpreting results, or designing new experiments.

This burden of tedium is a creativity bottleneck. When researchers are preoccupied with mechanical execution, their capacity for exploration and big-picture thinking narrows. Over time, this erodes not only productivity but also morale, especially among scientists whose training and instincts are oriented toward solving complex, open-ended problems.

This is precisely where artificial intelligence and automation can play a transformative role. One of the most immediate and measurable benefits of AI in the life sciences is its ability to take over these repetitive, time-consuming tasks. AI-powered systems, like Elucidata’s Polly, can now execute in hours what would previously take days or weeks. And they do so reliably, at scale, and without the variability that often comes with manual workflows.

But the value of this automation goes far beyond time savings. When scientists are no longer burdened by formatting errors or harmonization mismatches, they regain the mental space to be bold: to ask deeper questions, follow speculative leads, revisit older data with new perspectives, or design more ambitious studies. In this way, the time saved becomes time redirected toward innovation.
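To make "harmonization mismatches" concrete, here is a minimal Python sketch of the kind of metadata cleanup described above. It is a plain rule-based stand-in, not how Polly or any particular AI system works, and the field names (`tissue`, `sex`) and synonym tables are hypothetical, chosen only for illustration.

```python
import re

# Hypothetical synonym tables, for illustration only. A real project would
# draw these from a curated ontology rather than hard-coding them.
TISSUE_SYNONYMS = {
    "liver": "liver",
    "hepatic tissue": "liver",
    "lung": "lung",
    "pulmonary": "lung",
}
SEX_SYNONYMS = {"m": "male", "male": "male", "f": "female", "female": "female"}


def normalize(value):
    """Lowercase, trim, and collapse separators so spelling variants compare equal."""
    return re.sub(r"[\s_\-]+", " ", value.strip().lower())


def harmonize_record(record):
    """Return a cleaned copy of one sample record and the fields left unresolved."""
    cleaned = dict(record)
    unresolved = []
    for field, table in (("tissue", TISSUE_SYNONYMS), ("sex", SEX_SYNONYMS)):
        key = normalize(record.get(field, ""))
        if key in table:
            cleaned[field] = table[key]
        else:
            # Leave the original value untouched and flag it for a human.
            unresolved.append(field)
    return cleaned, unresolved


cleaned, unresolved = harmonize_record(
    {"sample_id": "S1", "tissue": " Hepatic_Tissue ", "sex": "M"}
)
print(cleaned, unresolved)
```

Anything the tables cannot resolve is returned in `unresolved` instead of being guessed, so a scientist still reviews the ambiguous cases while the routine ones are handled automatically.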

As scientific workflows become more complex, AI systems are moving beyond their role as automation engines to become true collaborators in the creative process. This co-creative function of AI, sometimes described as “Centaur” and “Cyborg” modes of collaboration,[1] represents a paradigm shift where scientists and AI systems jointly contribute to ideation, experimentation, and interpretation.

AI tools like large language models (LLMs) can serve as brainstorming companions. For researchers facing creative roadblocks or exploring unfamiliar areas, AI can generate hypotheses, suggest unexpected links between concepts, or propose alternative explanations and experimental designs. For instance, studies have shown that participants using tools like ChatGPT produced stories that were more novel and creative than those written without AI, especially among those with lower baseline creative ability.[2]

AI’s ability to equalize performance across team members, by enhancing idea originality and reducing variance, makes it a valuable tool in team-based science. In creative tasks, the least original team member often limits group success. By helping those who struggle with creative ideation reach higher baselines, AI can raise the collective output of scientific teams.

AI currently excels at divergent tasks like idea generation but lags in convergent tasks like evaluating and refining those ideas. Researchers still play the crucial role of validating, interpreting, and integrating outputs. The future of scientific creativity lies in hybrid models, where humans guide and critique AI outputs while leveraging AI’s expansive recall and combinatorial power.[1]

Professional-level creativity, the kind that leads to breakthroughs, innovative tools, or new therapeutic frameworks, is already being augmented by AI.[3] Examples include AlphaFold’s revolution in protein structure prediction and generative design tools for synthetic biology constructs.[4] These tools empower scientists to go beyond human cognitive limitations, simulating thousands of pathways or designs that would take humans months to compute.

Though empirical studies on AI’s role in domain-changing innovations are limited, tools like AlphaFold and generative chemistry platforms hint at the future. They are enabling insights and breakthroughs that may well underpin the next generation of Nobel-worthy discoveries. In these scenarios, AI functions not merely as a support system, but as a force multiplier for insight, hypothesis generation, and system-level thinking.[5]

AI can also guide the “how” of creativity. Tools can be designed to scaffold researchers’ creative process by prompting them to consider alternatives, helping them manage frustration during trial and error, or recommending paths based on individual working styles and past project data. This kind of support enhances not just what scientists create, but how effectively they bring those ideas to life.

In sum, AI is evolving into a trusted collaborator that contributes to creativity across all levels, from the lab bench to high-level strategic discovery. But to harness its potential fully, researchers must adopt a mindset that balances openness to AI’s suggestions with critical oversight, ensuring the ideas remain novel and meaningful.

While AI is often celebrated for its ability to support productivity and execution, one of its more powerful and underappreciated roles lies in its capacity to disrupt entrenched thinking. Scientific inquiry, especially in high-pressure and time-bound environments, can become risk-averse and narrowly focused, drifting toward safe hypotheses, familiar methodologies, and predictable outcomes. Over time, this can lead to creative stagnation.

AI offers a counterweight to this tendency. Generative models are trained on vast, diverse corpora across disciplines and domains, allowing them to produce unusual associations, unlikely pairings, and thought-provoking analogies. These outputs, while sometimes irrelevant or odd, can serve as catalytic prompts, nudging researchers to think differently, challenge assumptions, or approach problems from novel angles.

In fact, what is often described as an AI “hallucination,” an output that is technically incorrect or fabricated, can sometimes act as a creative jolt. While hallucinations are problematic in factual or clinical scenarios, in early-stage exploration they can spark divergent thinking. An incorrect claim might inspire a researcher to ask: “What if that were true?” or “Why does that not align with what I know?” In this way, even flawed outputs can serve a generative function.

AI contributes not only to the recombination of existing knowledge (hybridization) but also to its mutation, i.e., deliberate, structural shifts in how scientific problems are framed and approached. Disruption, in this sense, is an essential feature.[6]

Whether by surfacing outlier connections, suggesting unconventional pathways, or simply offering unexpected language, AI can pull researchers out of local cognitive minima and into new conceptual spaces. In this way, AI serves not just as a co-creator, but also as a creative destabilizer.

While AI systems have made impressive strides in supporting scientific creativity, they remain imperfect collaborators. Current tools often excel at divergent tasks but struggle with the convergent side of creativity, such as refining ideas, critically evaluating trade-offs, or aligning suggestions with contextual constraints.

Most AI systems lack the metacognitive self-awareness and situational reasoning needed to understand why a particular idea fits or fails within a scientific context.[5] They can suggest hypotheses, but cannot always assess whether those hypotheses are relevant, feasible, or ethical. This means that scientists must still provide the critical scaffolding and judgment that make creative work meaningful.

For instance, in a real-world field experiment involving 758 Boston Consulting Group consultants performing 18 complex knowledge tasks, AI access led to 25.1% faster task completion, 12.2% more tasks completed, and outputs rated over 40% higher in quality, but only for tasks within AI’s capabilities. For tasks beyond GPT-4’s competence, AI actually degraded performance: users were 19 percentage points less likely to produce correct solutions than those without AI, often because they over-relied on AI’s fluent but misleading language.[1]

These findings highlight a critical limitation. Current generative AI models can augment creativity and quality, but only when task alignment, user discernment, and collaborative strategy are in place. Without them, even expert users risk being misled. Effective human-AI co-creativity will depend on AI literacy, task decomposition, and emerging work models like the Centaur (division of labor) and Cyborg (tight integration), both of which outperformed traditional workflows in the study.

Another challenge is that AI systems often underperform in real-time collaboration. Most current models operate in short, prompt-response loops rather than sustained, interactive dialogue. This limits their ability to engage in the iterative, back-and-forth thinking that defines co-creative scientific work.

Furthermore, the risk of bias, both in the training data and in the suggestions AI makes, remains a significant hurdle. AI can reinforce dominant narratives or commonly accepted frameworks,[2] narrowing the idea space it’s meant to expand. In other words, without careful tuning and oversight, AI can end up echoing rather than challenging existing paradigms.

Lastly, creativity in science often hinges on serendipity, timing, and deep disciplinary nuance, factors that today’s generalist AI models are not yet equipped to handle. Moving from helpful assistant to true co-creator will require future models to incorporate domain-specific context, adaptive learning from user feedback, and the ability to collaborate across longer problem-solving arcs.